That’s a fun variation. The one I usually test models with is a vegetarian wolf and a carnivorous goat, but the variation with no other objects is an interesting one too.

By the way, here’s Claude 3 Opus’s answer:

The solution is quite simple:

- The man gets into the boat and rows himself and the goat across the river to the other side.

- Once they reach the other side, both the man and the goat get out of the boat.

And that’s it! Since there are no additional constraints or complications mentioned in the problem, the man and the goat can directly cross the river together using the boat.

I got the exact same answer as you, but then I tried again, prefacing the question with:

Can you help me with a puzzle?

This changed the answer drastically:

Great! Let’s solve this river crossing puzzle step by step. The key is to find a sequence of actions that gets the man and the goat safely across the river without leaving the goat unattended with something it might eat.

Given:

- A man and a goat are on one side of a river.

- They have a boat.

Step-by-step solution:

- The man takes the goat in the boat and crosses the river.

- The man leaves the goat on the other side of the river and returns alone in the boat.

- The man gets out of the boat on the original side of the river.

- The man gets back into the boat alone and crosses the river again.

- The man and the goat are now together on the other side of the river.

Therefore, the man and the goat have successfully crossed the river using the boat, without leaving the goat unattended on either side of the river.

I found out recently that a lot of stuff that’s supposedly done by AI is actually done by low-paid remote workers in underdeveloped countries.

Technically, everything AI does is actually done by low-paid workers.

Low-intelligence workers too.

AI is so young and people are already bullying it.

Impressive solution. I’m amazed.

That plot twist with the cabbage!

Sean Carroll has talked about a few word puzzles he asked ChatGPT and GPT-4 or whatever, and they were interesting examples. In one he asked something to the effect of “if I cooked a pizza in a pan yesterday at 200 C, is it safe to pick up?” and it answered with a very wordy “no, it’s not safe” because that was the best match of a next phrase given his question, and not because it can actually consider the situation.

I don’t deny that this kind of thing is useful for understanding the capabilities and limitations of LLMs but I don’t agree that “the best match of a next phrase given his question, and not because it can actually consider the situation.” is an accurate description of an LLM’s capabilities.

While they are dumb and unworldly they can consider the situation: they evaluate a learned model of concepts in the world to decide if the first word of the correct answer is more likely to be yes or no. They can solve unseen problems that require this kind of cognition.

But they are only book-learned and so they are kind of stupid about common sense things like frying pans and ovens.
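To make the “yes or no” point concrete, here’s a rough sketch of how two competing scores turn into a probability for the first word. The logit values are made up for illustration, and `p_yes` is a hypothetical helper, not anything from a real model’s API; a real model scores its whole vocabulary, not just two words.

```python
import math

def p_yes(logit_yes, logit_no):
    """Probability of 'yes' when only 'yes' and 'no' compete.

    This is a two-way softmax, which reduces to a sigmoid of the
    difference between the two scores.
    """
    return 1.0 / (1.0 + math.exp(-(logit_yes - logit_no)))

# Hypothetical scores a model might assign to each candidate first word
# (assumed numbers, not measured from any model).
logit_yes, logit_no = 0.4, 1.6

prob = p_yes(logit_yes, logit_no)
first_word = "yes" if prob > 0.5 else "no"
print(f"P(yes) = {prob:.2f}, first word: {first_word}")
```

Whether those scores come from “considering the situation” or from pattern matching is exactly what the thread is arguing about; the sketch only shows the last step.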

And nobody on the internet is asking obvious questions like that, so counterintuitively it’s better at solving hard problems. Not that it actually has any idea what it is doing.

EDIT: Yeah guys, I understand that it doesn’t think. Thought that was obvious. I was just pointing out that it’s even worse at providing answers to obvious questions that there is no data on.

Unfortunately it doesn’t have the capacity to “solve” anything at all, only to take a text given by the user and parse it into what essentially amounts to codons, then provide other codons that fit the data it was provided to the best of its ability. When the data it is given is something textual only, it does really well, but it cannot “think” about anything, so it cannot work with new data and it shows its ignorance when provided with a foreign concept/context.

edit: it also has a more surface-level filter to remove unwanted results that are offensive

This is the AI that tech bros want us to believe is going to take programmer jobs?

Not to disagree with the sentiment, but the screenshot is fake. I just tried the same prompt and it solved the “puzzle” without issue.

The system’s not deterministic, I think they say, try 10 times and maybe get five or 10 different answers.
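For anyone wondering why reruns can differ, here’s a minimal sketch of temperature sampling. The three “tokens” and their scores are invented for illustration and don’t come from any real model; the point is only that drawing from a probability distribution can give different picks on different runs, while always taking the top score cannot.

```python
import math
import random

def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; lower temperature sharpens them."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_token(tokens, logits, temperature, rng):
    """Draw one token at random, weighted by its softmax probability."""
    probs = softmax(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]

def greedy_token(tokens, logits):
    """Always pick the highest-scoring token (deterministic)."""
    best = max(range(len(logits)), key=lambda i: logits[i])
    return tokens[best]

# Made-up candidates and scores (assumptions for this sketch only).
TOKENS = ["cross together", "go back alone", "bring the cabbage"]
LOGITS = [2.0, 1.5, 1.0]

rng = random.Random(0)  # seeded here only so the sketch is repeatable
samples = [sample_token(TOKENS, LOGITS, 1.0, rng) for _ in range(10)]
print(samples)                       # a mix of candidates is possible
print(greedy_token(TOKENS, LOGITS))  # always the top-scoring candidate
```

Hosted chat apps usually sample rather than pick greedily, which would fit with people in the thread getting different answers to the same prompt.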

Sure, but the fact that the top of the first prompt is cut off is a little sus.

Perhaps, yes.

I’d already scrolled the thread by this point and it’s actually surprising just how many people tried it themselves. Tons of confirmation and comparisons to competing models.

Behold. This shit is supposed to take our jobs.

😁

I work with plenty of engineers, structural, electrical, mechanical, who all have their own version of a cabbage.

That’s just engineer speak for figure it the fuck out yourself.

I’m sorry, I don’t understand what you mean.

Are you an engineer LoL?

November 2022: ChatGPT is released

April 2024 survey: 40% of translators have lost income to generative AI - The Guardian

Also of note from the podcast Hard Fork:

There’s a client you would fire… if copywriting jobs weren’t harder to come by these days as well.

Customer service impact, last October:

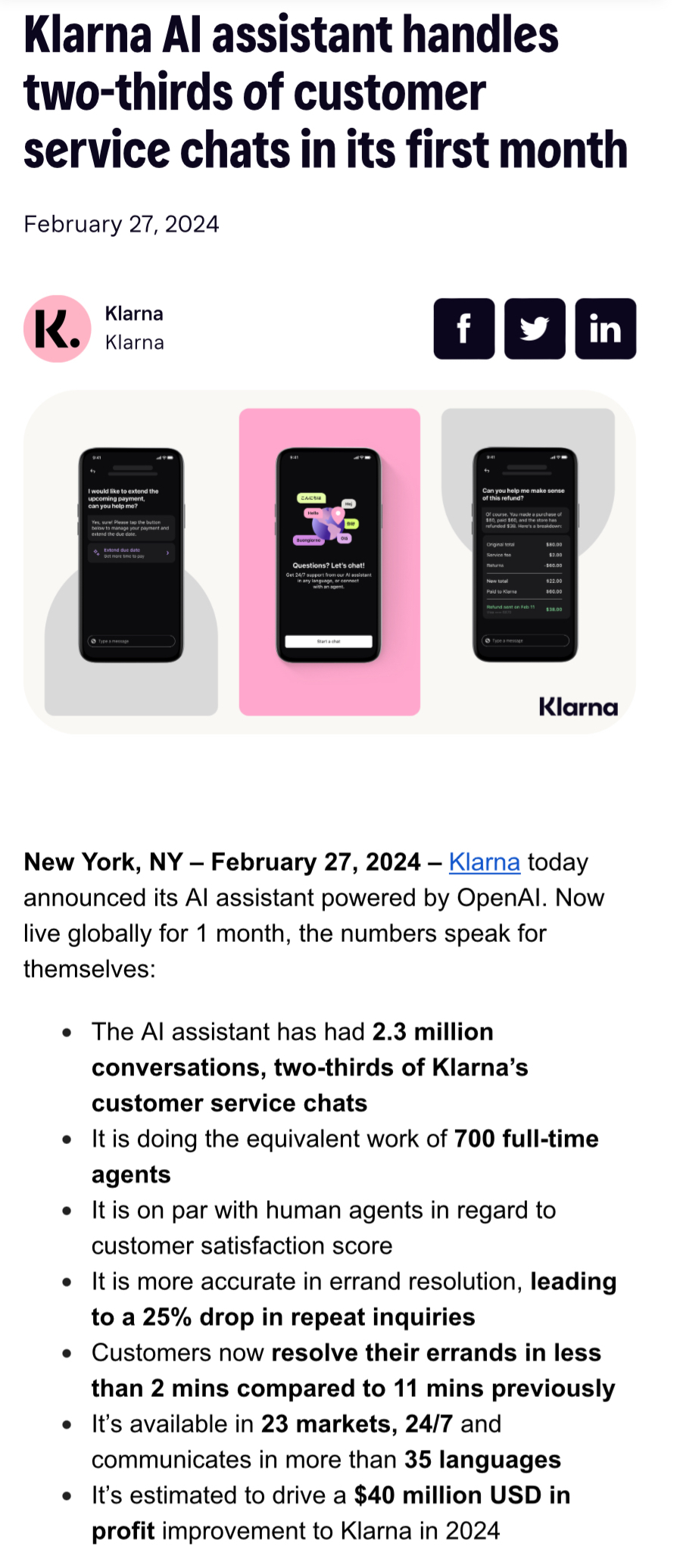

And this past February - potential 700 employee impact at a single company:

If you’re technical, the tech isn’t as interesting [yet]:

Overall, costs down, capabilities up (neat demos):

Hope everyone reading this keeps up their skillsets and fights for Universal Basic Income for the rest of humanity :)

AI will take over the world. You only need to tell it that there’s no cabbage.

There’s always a cabbage.