It’s reached 333 protesters! That’s 1/3 of the way to 1000; it’d be cool if it kept on increasing :)

Thank you for the neat examples! :) I think I get it now.

Thank you! What you wrote confused me at first: I thought that using @something would create a post visible only to user something, like a direct message. Now I’ve re-read the help pages, and I see that there’s a second “” at the bottom of the post field to make the post visible only to something; otherwise (globe icon) the post is public.

May I ask: in this latter case, what does @something achieve, then? Is it a sort of “user mention”, so that the user is notified that they have been mentioned in a public post? Will other users interested in something see it, then?

[Edit: I realize that my question was phrased in a completely misleading way. Corrected now.]

Cheers!

Nothing dense in this, I don’t quite know what to write either. In my opinion what you wrote in your comment is just perfect, you’re a citizen simply expressing an honest concern, without lying – not all people are tech-savvy. It also makes it clear that it’s a letter from a real person.

But that’s only my point of view, and maybe I haven’t thought enough steps ahead. Let’s see what other people suggest and why.

From this GitHub comment:

If you oppose this, don’t just comment and complain, contact your antitrust authority today:

This is actually already implemented, see here.

(also @[email protected])

Maybe it is pointless, maybe it is a bad idea. Maybe not. It’s difficult to predict what effect this kind of small-scale action will have on the big picture and on future development. No matter what you choose to do or not to do, it’s always a gamble. My way of thinking is that it’s good if people say, through this kind of gesture, “I’m vigilant, I won’t allow just anything to be done to me. There’s a line that shouldn’t be crossed”.

Of course you’re right about supporting and choosing alternative browsers, and similar initiatives. There are many initiatives on that front as well. I’ve never used Chrome, to be honest; always Firefox. But now I’ve even uninstalled the Chromium that came pre-installed on my (Ubuntu) machines. Besides that, I ditched Gmail years ago, and I’ve also decided, as a matter of principle, to flatly refuse to use Google tools (Google Docs and whatnot) with collaborators. If that means I’m cut out of projects, so be it.

Regarding WEI, I see your point, but I see dangers in “acknowledging” too much. If you read the “explainer” by the Google engineers, or their replies to comments and criticisms in general, you see that they constantly use deceptive, manipulative, and evasive language. For example, the “explainer” keeps saying “the user needs this”, “the user desires that”, but when you unpack the real meaning of the sentences, it’s clear that it isn’t something done for the user.

This creates a need for human users to prove to websites that they’re human

Note the “need for human users”, but the sentence actually means “websites need users to prove…”. This is just one example. The whole explainer is written in this deceptive manner.

The replies to criticisms are all evasive. They don’t answer the actual questions or address the actual issues; they go off on a tangent and spout a lot of blah blah with “benefit”, “user”, and other soothing words, but the actual question or issue never gets addressed. (Well, if this isn’t done on purpose, then it means they are mentally impaired, with sub-normal comprehension skills.)

I fuc*ing hate this kind of deceptive politician talk, which is a red flag that they’re up to no good, and I know from personal experience that as soon as you “acknowledge” something, they’ll drag you into their circular, empty blabber while they do what they please.

More generally, I think we should do something against the current ad-based society and economy. So NO to WEI for me.

Agree (you made me think of the famous face on Mars). I mean that more as a joke. Also, there’s no clear threshold or divide on one side of which we can speak of “human intelligence”. There’s a whole range from impairing disabilities to Einstein and Euler, if it even makes sense to use a linear 1D scale, which it very probably doesn’t.

Here?: https://ungoogled-software.github.io/about/

Looks like a good project; I didn’t know it existed.

Yes, the purpose isn’t sabotaging.

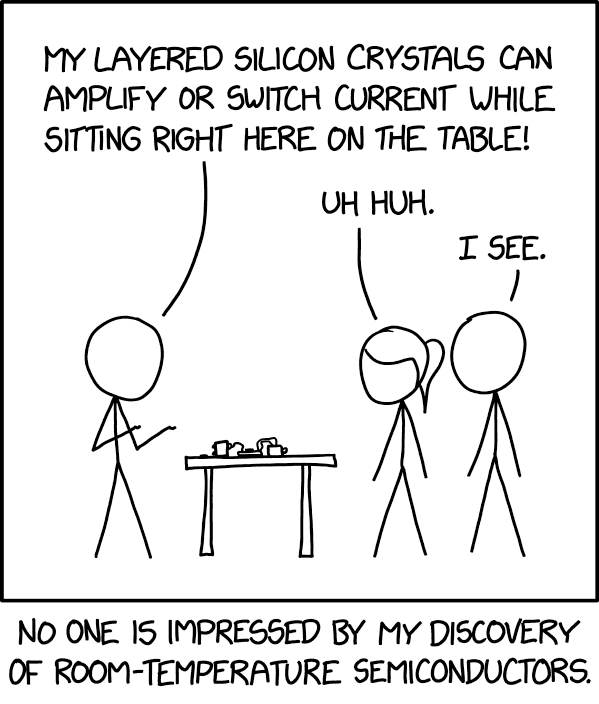

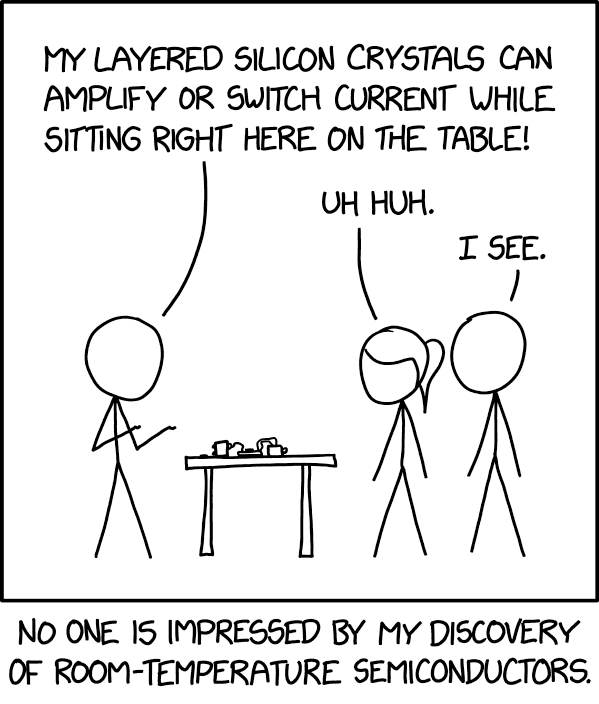

Title:

ChatGPT broke the Turing test

Content:

Other researchers agree that GPT-4 and other LLMs would probably now pass the popular conception of the Turing test. […]

researchers […] reported that more than 1.5 million people had played their online game based on the Turing test. Players were assigned to chat for two minutes, either to another player or to an LLM-powered bot that the researchers had prompted to behave like a person. The players correctly identified bots just 60% of the time

Complete contradiction. Nature is trash: it has become nothing more than an extremely expensive science gossip magazine.

PS: The Turing test involves comparing a bot with a human (not knowing which is which). So if more and more bots pass the test, this can be the result either of an increase in the bots’ Artificial Intelligence, or of an increase in humans’ Natural Stupidity.

There’s an ongoing protest against this on GitHub, symbolically modifying the code that would implement this in Chromium. See this Lemmy post by the person who had this idea, and this GitHub commit. Feel free to “Review changes” –> “Approve”. Around 300 people have joined so far.

Yeah that’s bullsh*t by the author of the article.

This is so cool! Not just the font but the whole process and study. Please feel free to cross-post to Typography & fonts.

I’d like to add one more layer to this great explanation.

Usually, this kind of prediction should be made in two steps:

1. calculate the conditional probability of the next word (given the data), for all possible candidate words;
2. choose one word among these candidates.

The choice in step 2 should, in principle, be determined by two factors: (a) the probability of a candidate, and (b) the cost or gain of making the wrong or right choice if that candidate is chosen. There’s a trade-off between these two factors. For example, a candidate might have low probability but be a safe choice, in the sense that no big problems arise if it turns out to be wrong, so it’s the best choice. Or a candidate might have high probability but terrible consequences if it turned out to be wrong, so it’s better to discard it in favour of something less likely but also less risky.

This is all common sense! But it’s the foundation of the theory behind this (Decision Theory).
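To make the two-step recipe concrete, here is a minimal Python sketch with made-up numbers. The candidate words, their probabilities and the cost/gain table are all hypothetical, chosen only to reproduce the situation described above, where the most probable word is also the riskiest one. Step 2 picks the candidate with the highest expected utility, i.e. its gains and costs averaged over how likely each word is to be the right one: EU(c) = Σ_w P(w) · U(c, w).

```python
# Toy illustration of the two-step recipe; every number is hypothetical.

# Step 1 (assumed already done): probability that each word is the right one.
probs = {"safe": 0.2, "risky": 0.5, "neutral": 0.3}

# utility[chosen][actual]: gain (positive) or cost (negative) of choosing
# `chosen` when the right word was actually `actual`.
utility = {
    "safe":    {"safe": 1.0,   "risky": 0.5, "neutral": 0.5},
    "risky":   {"safe": -10.0, "risky": 2.0, "neutral": -10.0},
    "neutral": {"safe": 0.3,   "risky": 0.3, "neutral": 1.0},
}


def expected_utility(choice, probs, utility):
    """Gain of `choice`, averaged over how likely each word is to be right."""
    return sum(p * utility[choice][actual] for actual, p in probs.items())


def best_choice(probs, utility):
    """Step 2: pick the candidate with the highest expected utility."""
    return max(probs, key=lambda c: expected_utility(c, probs, utility))


most_probable = max(probs, key=probs.get)
print("most probable:", most_probable)            # most probable: risky
print("best choice:", best_choice(probs, utility))  # best choice: safe
```

Here "safe" wins with an expected utility of about 0.6 (versus about 0.51 for "neutral" and -4.0 for "risky"), even though it is the least probable candidate: its small downside outweighs its low probability.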

The proper calculation of steps 1 and 2 together, according to the fundamental rules (probability calculus and decision theory), would be enormously expensive. So expensive that something like ChatGPT would be impossible: we’d have to wait centuries (just a guess: it could be decades or millennia) to train it, and then to get an answer. This is why Large Language Models make three approximations, which can obviously have serious drawbacks:

1. they use extremely simplified cost/gain figures; in fact, from what I gather, the researchers don’t have any clear idea of what they are;
2. they directly combine the simplified cost/gain figures with probabilities;
3. they search for the candidate with the highest gain+probability combination, but stop as soon as they find a relatively high one, at the risk of missing the one that was actually the real maximum.
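The last two approximations can be caricatured in a few lines of Python. This is a hypothetical toy, not how any particular LLM actually decodes text (real systems use strategies such as greedy decoding, beam search, or sampling): each candidate gets a single score, assumed here to be its probability plus a crude gain, and the search stops at the first score above an arbitrary threshold, so it can miss the maximum that an exhaustive search would find.

```python
# Hypothetical toy: crude combined scores plus an early-stopping search.


def combined_score(prob, gain):
    # Assumed crude combination: just add a simplified gain to the probability.
    return prob + gain


def early_stop_search(candidates, threshold):
    """Return the first candidate whose score reaches `threshold`
    (or the best one seen, if none does). May miss the real maximum."""
    best_word, best_score = None, float("-inf")
    for word, prob, gain in candidates:
        score = combined_score(prob, gain)
        if score > best_score:
            best_word, best_score = word, score
        if score >= threshold:
            break  # "good enough": the remaining candidates are never checked
    return best_word


def exhaustive_search(candidates):
    """Check every candidate: always finds the maximum, but costs more."""
    return max(candidates, key=lambda c: combined_score(c[1], c[2]))[0]


# (word, probability, simplified gain): made-up values
candidates = [("fine", 0.40, 0.2), ("good", 0.35, 0.1), ("best", 0.25, 0.6)]

print(early_stop_search(candidates, threshold=0.5))  # fine
print(exhaustive_search(candidates))                 # best
```

With a higher threshold (say 10.0) no candidate is "good enough", the loop visits every candidate, and the result coincides with the exhaustive search; the threshold trades computing cost against the risk of stopping too early.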

(Sorry if this comment has a lecturing tone; it’s not meant to. But I think that the theory behind these algorithms can actually be explained in very common-sense terms, without too much technobabble, as @TheChurn’s comment showed.)

Superb summary!

Unfortunately, the original article is based on statistical methods that a large number of statisticians today acknowledge to be flawed (see this official statement and this editorial of the American Statistical Association). So the findings might be correct, but then again they might not be.

I had never heard of Graphite, thank you! I’ll try to stay updated about it. But please feel free to post important news about it in this community whenever there are steps forward.

😂 Great choice! I have a friend who wanted to be a fence erector, but after seeing this infographic had a change of heart.